A technical case study on why artificial intelligence is sometimes more artificial than intelligent – and why this isn’t a Claude-exclusive problem.

AI’s Epic Fail: How an AI Completely Botched a 3-Minute Task in 2 Hours and 12 Restarts

The Stasi could only dream of Big Tech’s capabilities. A comparison between analog and digital surveillance.

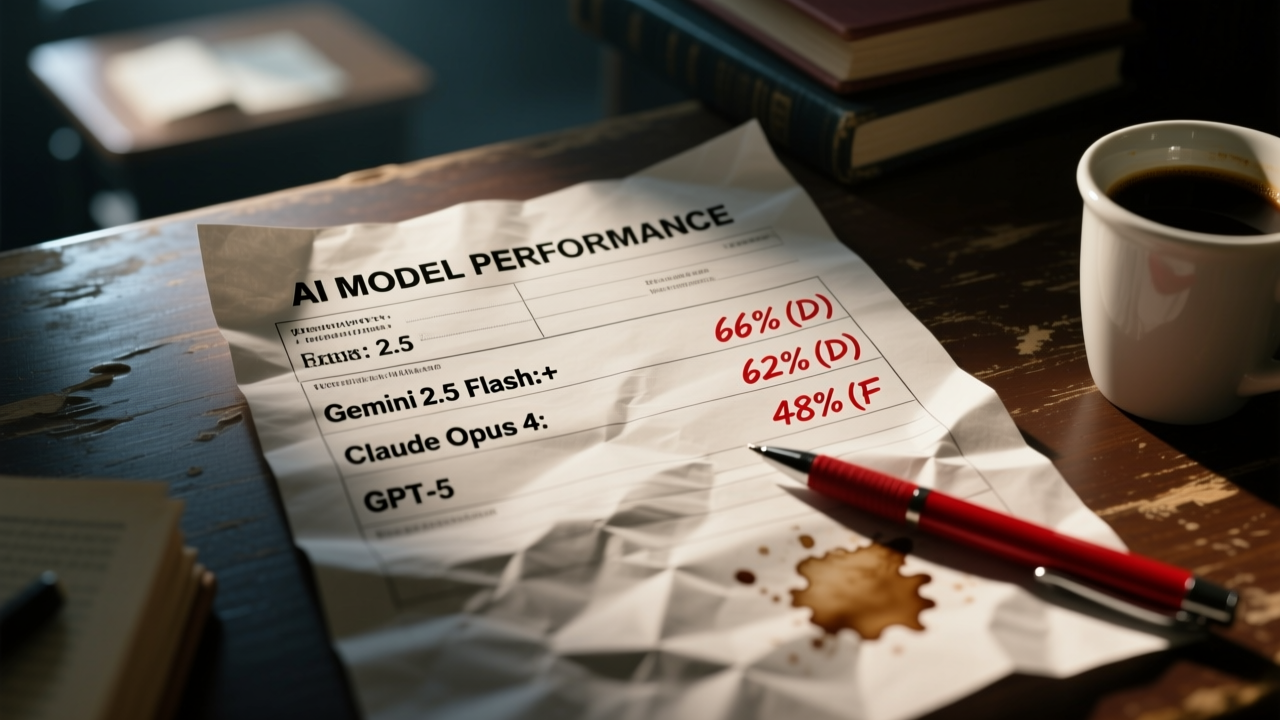

The world’s best AI models fail 1 in 3 tasks. Here’s what the benchmarks really show about GPT-5, Claude Opus 4, and Gemini 2.5 Flash.

Markus Söder has registered a döner kebab trademark. Yes, really. Welcome to 2024, where Bavarian Minister-Presidents register their own fast-food brands.

How Grok scraped an entire GitHub repository, listed all features, admitted ‘dbbackup beats Veeam’ – and still said ‘No.’ 8 times in a row.