The AI Illusion: Technical Reality Behind the Hype

I don’t want to convince anyone of something they don’t see themselves – that’s pointless.

But I do believe it’s valuable to have an informed opinion. And for that, we need access to alternative perspectives, especially when marketing hype dominates the narrative.

This article combines technical analysis with critical perspectives from leading AI researchers.

Part 1: What LLMs Really Are

What is an LLM?

A Large Language Model (LLM) like GPT-4 is a massive statistical engine that predicts the most likely next token (a word or word fragment) given the preceding text, based on patterns in its training data. It doesn’t think. It doesn’t understand. It completes patterns.

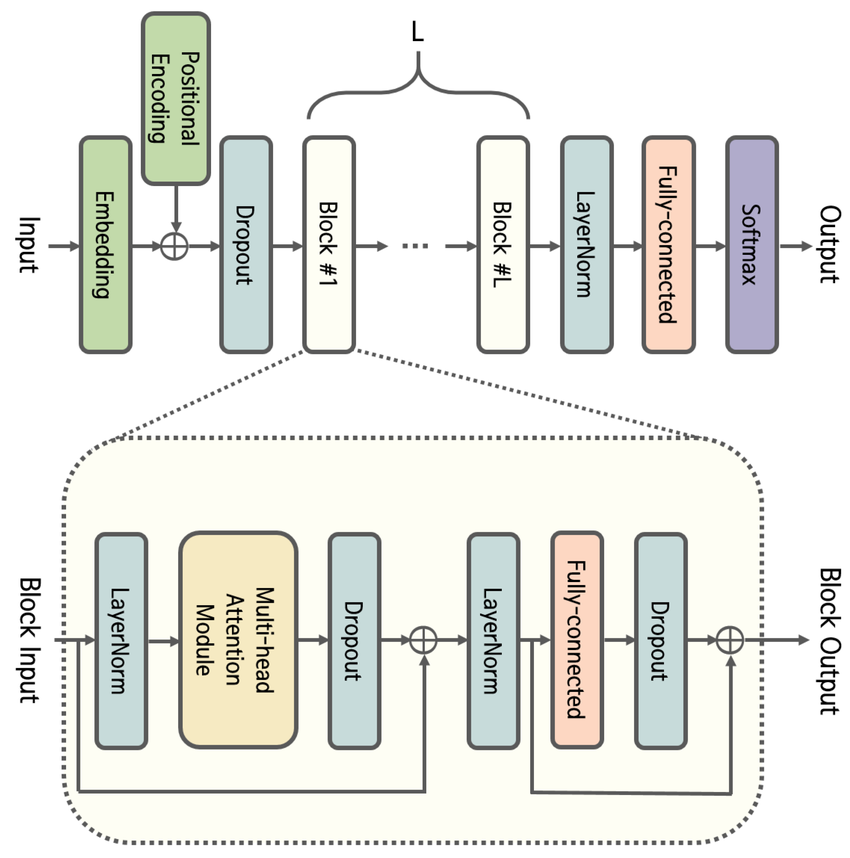

How Transformers Work

- Inputs (tokens) are converted to vectors.

- Self-attention layers calculate relationships between tokens.

- The model outputs a probability for every token in its vocabulary, and the next token is chosen from that distribution.
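The three steps above can be sketched in a few lines of plain Python. This is a toy under loud assumptions, not how any production model is built: the vocabulary and the 2-d embeddings are hand-picked rather than learned, there is a single attention head with no learned query/key/value projections, and the output layer simply reuses the embeddings.

```python
import math

# Hypothetical toy vocabulary with hand-picked (not learned) 2-d embeddings.
VOCAB = ["the", "cat", "sat", "mat"]
EMBED = {
    "the": [1.0, 0.0],
    "cat": [0.0, 1.0],
    "sat": [1.0, 1.0],
    "mat": [0.5, 1.5],
}

def softmax(xs):
    """Turn arbitrary scores into probabilities that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """Single-head scaled dot-product attention with Q = K = V = input.

    Each position only attends to itself and earlier positions (causal mask).
    """
    d = len(vectors[0])
    out = []
    for i, query in enumerate(vectors):
        visible = vectors[: i + 1]
        scores = [dot(query, key) / math.sqrt(d) for key in visible]
        weights = softmax(scores)
        mixed = [sum(w * v[j] for w, v in zip(weights, visible)) for j in range(d)]
        out.append(mixed)
    return out

def next_token_distribution(tokens):
    vectors = [EMBED[t] for t in tokens]            # step 1: tokens -> vectors
    contextual = self_attention(vectors)            # step 2: relate tokens to each other
    last = contextual[-1]
    logits = [dot(last, EMBED[w]) for w in VOCAB]   # step 3: score every vocabulary entry
    return dict(zip(VOCAB, softmax(logits)))

dist = next_token_distribution(["the", "cat", "sat"])
for token, p in dist.items():
    print(f"{token}: {p:.3f}")
```

Everything in the sketch is multiplication, addition, and normalization. Real models stack many such layers with billions of learned weights, but the kind of operation stays the same.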

There is no internal world model, no consciousness, no logic engine.

Example:

Input: “The cat sat on the…”

Output: “…mat” (highest statistical likelihood from training data)
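To make "highest statistical likelihood from training data" concrete, here is a minimal sketch that uses plain n-gram counting instead of a Transformer. The three-sentence corpus is invented for illustration, and a real LLM generalizes far beyond literal counts, but the principle of picking the most probable continuation is the same.

```python
from collections import Counter, defaultdict

# Hypothetical miniature "training data".
CORPUS = [
    "the cat sat on the mat",
    "the dog sat on the mat",
    "the cat sat on the sofa",
]

def train(corpus, n=2):
    """Count which word follows each n-word context."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for i in range(len(words) - n):
            context = tuple(words[i : i + n])
            counts[context][words[i + n]] += 1
    return counts

def predict(counts, prompt, n=2):
    """Return the most frequent follower of the prompt's last n words."""
    context = tuple(prompt.split()[-n:])
    followers = counts.get(context)
    if not followers:
        return None  # context never seen in the training data
    return followers.most_common(1)[0][0]

counts = train(CORPUS)
prediction = predict(counts, "the cat sat on the")
print(prediction)  # prints: mat
```

"mat" wins only because it follows "on the" twice in the corpus while "sofa" follows it once. Nothing in the program knows what a cat or a mat is.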

Why it’s not Intelligence

- No understanding of meaning.

- No memory between sessions (unless externally engineered).

- No intention or goal beyond completing patterns.
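The "externally engineered" memory mentioned in the list can be sketched as follows. `stateless_model` is a hypothetical stand-in for an LLM call, not a real API: its answer depends only on the text it is handed. The `Session` class is also an assumption for illustration. It shows the common pattern of keeping the transcript in the application and re-sending it on every turn.

```python
def stateless_model(prompt):
    """Hypothetical stand-in for an LLM call: output depends only on the prompt."""
    text = prompt.lower()
    if "what is my name" in text:
        if "my name is ada" in text:
            return "Your name is Ada."
        return "I don't know your name."
    return "Noted."

class Session:
    """External 'memory': the application stores the transcript, not the model."""

    def __init__(self):
        self.history = []

    def ask(self, message):
        self.history.append(f"User: {message}")
        # The whole transcript is sent again on every turn.
        answer = stateless_model("\n".join(self.history))
        self.history.append(f"Assistant: {answer}")
        return answer

session = Session()
print(session.ask("My name is Ada."))    # prints: Noted.
print(session.ask("What is my name?"))   # prints: Your name is Ada.
print(Session().ask("What is my name?")) # prints: I don't know your name.
```

The apparent memory lives entirely in `Session.history`. Start a new session and it is gone.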

LLMs are ELIZA on steroids: eloquent, scaled, but fundamentally hollow.

LLMs are like very fast autocomplete machines with a huge memory – not minds.

Source: ResearchGate, CC BY-NC-ND 4.0

Part 2: Why Statistics ≠ Thinking

Transformer models don’t “think” – they optimize probability.

Their output is impressive, but it’s entirely non-conceptual.
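"Optimizing probability" has a precise meaning. Language models of this kind are typically trained to minimize the average negative log-probability they assign to the token that actually came next (cross-entropy). A minimal sketch, with made-up probability values standing in for model outputs:

```python
import math

def next_token_loss(probs_of_correct_tokens):
    """Average negative log-likelihood of the tokens that actually came next."""
    total = sum(math.log(p) for p in probs_of_correct_tokens)
    return -total / len(probs_of_correct_tokens)

# Hypothetical probabilities a model assigned to the true next tokens.
confident = next_token_loss([0.9, 0.8, 0.95])
unsure = next_token_loss([0.2, 0.1, 0.3])

print(f"confident model loss: {confident:.3f}")
print(f"unsure model loss:    {unsure:.3f}")
```

Training pushes this number down. The objective rewards assigning high probability to the observed text and says nothing about concepts, truth, or goals.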

Why Transformers Don’t Think

Despite the hype, Transformer-based models (like GPT) lack fundamental characteristics of thinking systems:

- No real-world grounding

- No understanding of causality

- No intentions or goals

- No model of self or others

- No abstraction or symbol grounding

- No mental time travel (memory/planning)

They are statistical mirrors, not cognitive agents.

A Transformer is not a mind. It’s a sophisticated parrot with vast echo chambers.

Neural ≠ Human

Transformers are not brain-like. They don’t emulate cortical processes, dynamic learning, or biological feedback loops.

They are pattern matchers, not pattern understanders.

In neuroscience, intelligence is not just prediction — it’s about integration of sensory input, memory, context, and motivation into purposeful behavior. Transformers do none of this.

See:

- Rodney Brooks – Intelligence without representation

- Yoshua Bengio – System 2 Deep Learning and Consciousness

- Karl Friston – Active Inference Framework

Deceptive Surface, Missing Depth

Transformers simulate fluency, not understanding.

They can:

- Imitate a legal argument

- Compose a poetic reply

- Continue a philosophical dialogue

But they do not:

- Know what a contract is

- Grasp the emotional weight of a metaphor

- Reflect on the meaning of a question

This is the ELIZA effect at scale: We project cognition into statistical output.
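For a sense of how little machinery the original effect needed, here is a minimal ELIZA-style responder. The three rules are invented for illustration and are far simpler than Weizenbaum's original script, but the mechanism is the same kind: match a surface pattern, echo part of the input back.

```python
import re

# Hypothetical rules: (pattern, reply template). Not Weizenbaum's original script.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (\w+)", re.IGNORECASE), "Tell me more about your {0}."),
]
FALLBACK = "Please go on."

def reply(utterance):
    """Return the first matching canned reply, echoing the captured words."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(match.group(1))
    return FALLBACK

print(reply("I feel lost."))          # prints: Why do you feel lost?
print(reply("My contract expired."))  # prints: Tell me more about your contract.
print(reply("The weather is nice."))  # prints: Please go on.
```

The replies can feel attentive, yet the program has no idea what "lost" or "contract" means. The sense of being understood is supplied by the reader.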

Transformers as a Dead End?

The current AI trajectory is caught in a local maximum:

More data – bigger models – better output… but no step toward real cognition.

Scaling does not equal understanding.

True AI may require:

- Symbol grounding

- Embodiment

- Continual learning

- Causal reasoning

- Cognitive architectures beyond Transformers

See:

- Gary Marcus – Deep Learning Is Hitting a Wall

- Timothy Shanahan – Transformers lack abstraction

- Neurosymbolic approaches – MIT-IBM Watson AI Lab

Part 3: Voices of Critical AI Research

Here are key voices from leading AI researchers who critically examine the label “Artificial Intelligence” and the risks it implies:

Emily M. Bender: “Stochastic Parrots”

Emily Bender coined the term “Stochastic Parrots” to describe how models like ChatGPT generate statistically plausible text without any real understanding.

👉 ai.northeastern.edu

👉 The Student Life

Timnit Gebru: Structural Change for Ethical AI

Timnit Gebru emphasizes the need for systemic reform to enable ethical AI development.

👉 WIRED

Gary Marcus: Regulation Against AI Hype

Gary Marcus calls for strong governmental oversight to prevent harm from unregulated AI systems.

👉 Time

Meredith Whittaker: AI as Surveillance Capitalism

Meredith Whittaker sees AI as rooted in systemic data exploitation and power concentration. She warns that agentic AI could compromise application-level security and threaten the privacy of millions of users.

Whittaker emphasizes that the major AI players develop technologies with surveillance as a business model. These services are initially free, but in the background, vast amounts of data are generated, stored, and made available to the advertising industry.

👉 Observer – Signal’s Whittaker on AI Privacy Risks

👉 The Economist – AI agents are coming for your privacy

👉 WIRED – How Meredith Whittaker Remembers SignalGate

Sandra Wachter: Right to Explainability

Sandra Wachter calls for legal frameworks to ensure algorithmic accountability and fairness. Her work focuses on the legal and ethical implications of big data, artificial intelligence, and algorithms.

Wachter has highlighted numerous cases where opaque algorithms have led to discriminatory outcomes, such as the screening of applicants at St. George’s Hospital in the 1970s and the COMPAS risk-assessment tool, which overestimated the likelihood that Black defendants would reoffend.

She developed tools like counterfactual explanations, which make it possible to interrogate an algorithm’s decisions without revealing trade secrets. This approach has been adopted by Google in TensorBoard and by Amazon in its cloud services.
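The idea behind a counterfactual explanation can be sketched in a few lines. Everything here is hypothetical: the scoring rule, the feature names, and the brute-force search are assumptions for illustration, not Wachter's published method or any vendor's implementation. The point is that the search only calls the model and looks at its yes/no answer. It never reads the model's internals.

```python
def black_box_approve(applicant):
    """Hypothetical opaque scoring rule; callers only ever see True or False."""
    score = 0.5 * applicant["income_k"] - 2.0 * applicant["missed_payments"]
    return score >= 20.0

def counterfactual(applicant, feature, step, max_steps=100):
    """Find the smallest change to one feature (in multiples of `step`)
    that flips the black box's decision. Returns None if nothing flips it."""
    original = black_box_approve(applicant)
    candidate = dict(applicant)
    for _ in range(max_steps):
        candidate[feature] += step
        if black_box_approve(candidate) != original:
            return candidate
    return None

applicant = {"income_k": 30, "missed_payments": 1}
print(black_box_approve(applicant))  # prints: False

flipped = counterfactual(applicant, "income_k", step=1)
print(flipped["income_k"])  # prints: 44
```

The resulting explanation reads: "You were declined. Had your income been 44k instead of 30k, you would have been approved." A practical method would also restrict the search to plausible, actionable changes, which this sketch does not attempt.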

Conclusion

Transformers are linguistic illusions.

They simulate competence — but have none.

The path to real AI won’t come from scaling up language models.

It will come from redefining what intelligence means — not just what it sounds like.

LLMs are powerful tools, but calling them “intelligent” is misleading.

This site exposes how this false label is used to manipulate public perception.

We should stop asking: “How good does the output sound?”

And start asking: “What kind of system produces it?”
