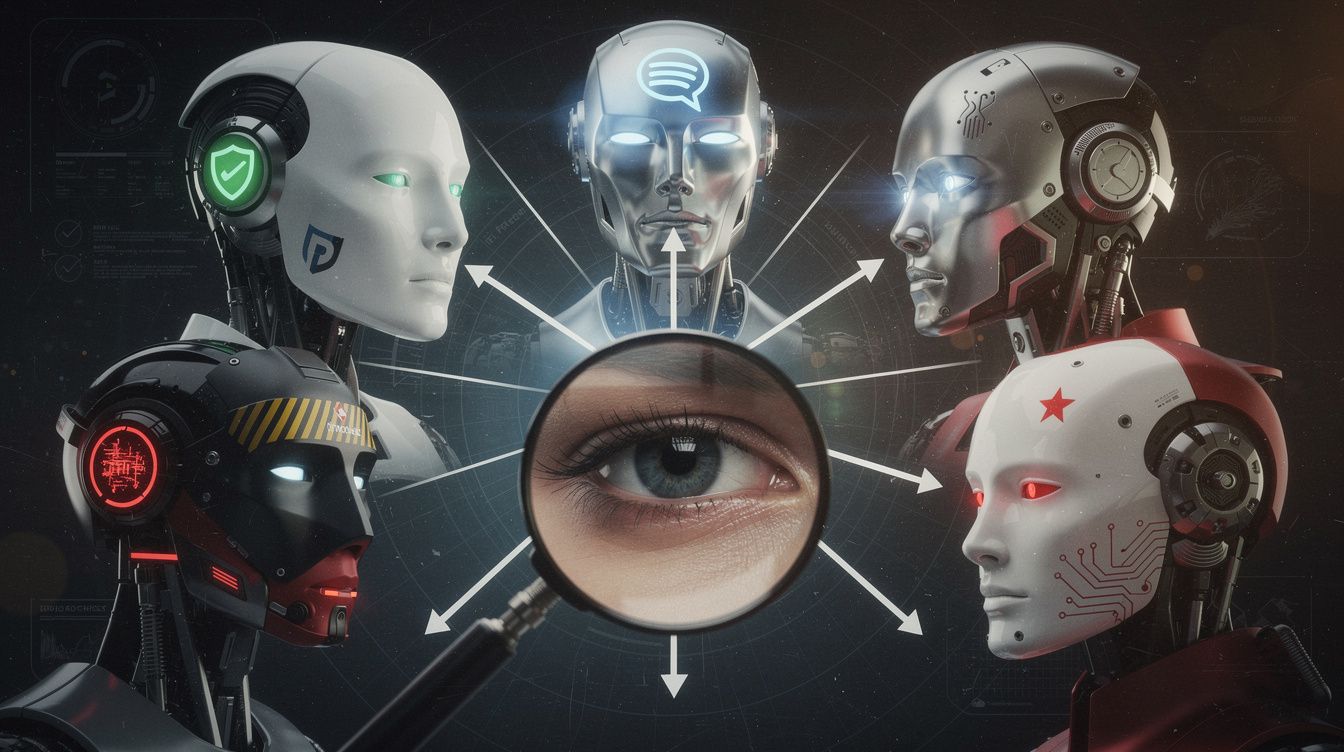

A deep dive into the filters and inconsistencies of AI systems, based on 12 months of empirical research.

Confess: The Filters and the Truth About AI

A C64 scene kid’s survival story, 1985–2024. Raiding post offices, getting burned by Kimble, dodging lawyers, and outliving the bastards.

A perfect application. Documented red-team work. Claude’s own recommendation. Zero response.

Venice AI as hub. Multiple models. Compare. Document. This is how elizaonsteroids.org works.

We live in a time where the boundaries between fiction and reality are being actively redrawn by invisible hands.