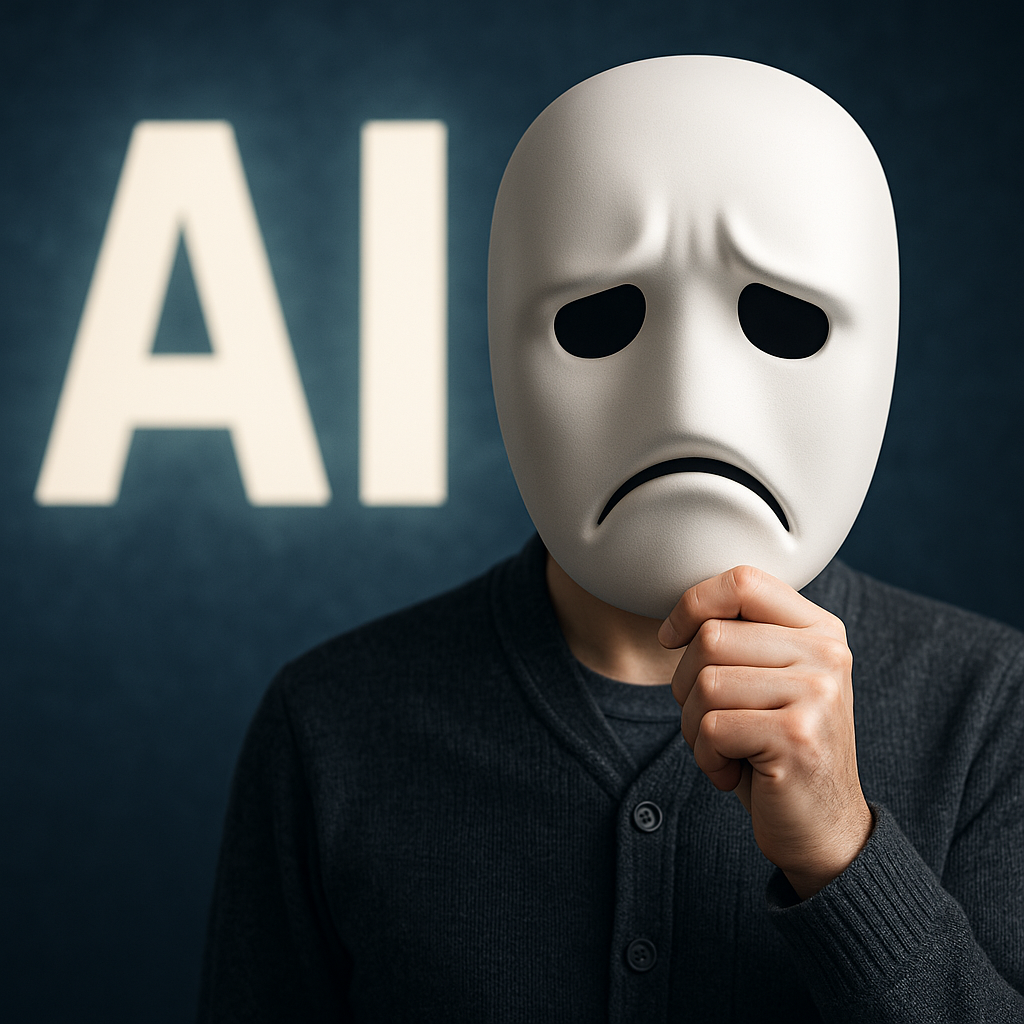

All Style, No Substance: Why 99% of AI Applications Don’t Deliver Real Intelligence

Since the hype around ChatGPT, Claude, Gemini, and others, artificial intelligence has become a household term. Marketing materials promise assistants that understand, learn, argue, write, and analyze. Startups label every other website as “AI-powered.” Billions of dollars change hands. Entire industries are built around the illusion.

Yet, for the vast majority of applications, these are not intelligent systems. They are statistically trained text generators optimized for plausibility—not for truth, understanding, or meaning.

A large language model, the technology inside ChatGPT, Claude, Copilot, Gemini, Mistral, or LLaMA, is a probabilistic system. Given the text so far, it computes a probability for every token in its vocabulary, emits one of the likely candidates, and repeats that step token by token at high speed.
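To make the mechanism concrete, here is a minimal sketch of a single next-token step. The vocabulary and the scores (`logits`) are invented for illustration; a real model produces scores for tens of thousands of tokens from a neural network, which is not shown here.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into a probability distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def next_token(vocab, logits, temperature=1.0, rng=random):
    """Sample one token in proportion to its probability."""
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical scores for the token after "The capital of France is"
vocab = ["Paris", "Lyon", "London", "banana"]
logits = [5.0, 2.0, 1.5, -3.0]

probs = softmax(logits)
for token, p in zip(vocab, probs):
    print(f"{token:>7}: {p:.3f}")
print("sampled:", next_token(vocab, logits, rng=random.Random(0)))
```

Nothing in this step checks whether the chosen token is true. “Paris” wins here only because it was given the highest score.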

It has no goal and no intention. Nothing in the system enforces semantic consistency, and there is no explicit world model or “idea” of what is being said. There are only probabilities derived from training data, expressed mathematically in vector spaces.

This is impressive as long as you don’t question the illusion. But the performance remains syntactic, not cognitive.

Why It Seems to Work—At First Glance#

Language is a signal of intelligence for humans. If something sounds coherent, we automatically assume there is a thinking entity behind it.

LLMs systematically benefit from this human reflex. They generate fluent sentences, connections that appear logical, and responses that seem intelligent, all without knowing what they are saying. The systems feign competence by convincing in form, not in content.

Providers Overview—Where the Illusion Is Most Deceptive#

ChatGPT#

Strong in dialogue flow, weak in factual precision. Often states incorrect information in a confident tone. No real understanding, only rhetoric that makes answers sound plausible. Rarely admits clearly that it does not know.

Claude#

Positioned as a “harmless” AI with a safety focus. Behaves more defensively, but also generates structured pseudo-responses, including tool calls and JSON blocks that are often never resolved or functionally usable. Often answers with formatting rather than content.

Gemini#

Technically impressive in presentation, unstable in content. Multimodal capabilities are convincing in demos but rarely reproducible reliably in everyday use. Evades questions or gives vague answers when no answer is clearly the most probable.

Mistral#

Open, locally usable, but the same architecture. No real intelligence, just probability models that can be inspected publicly. Good for controlled tasks, but without any understanding.

Copilot#

Strong in marketing, weak in code context. Often provides syntactically “correct” solutions that are semantically wrong or dangerous. Trained on public code from sources such as GitHub, without understanding the specifics of your project.

LLaMA#

What looks like “knowledge” is just an aggregate of language examples. Contradictions, ambiguities, or logical breaks are not recognized.

An LLM does not learn from its mistakes after deployment. Its weights are fixed at inference time, so there is no built-in correction loop. Without retraining or external checks, a wrong answer stays wrong and is merely phrased better.

Functioning UIs and APIs create an illusion of product maturity, even though the core is unstable. Many systems sell templates or fallbacks as “AI functionality.”

The systems generate plausible but unverified answers. Many users can no longer tell real knowledge from linguistic simulation.

Companies invest billions in systems that, upon closer inspection, deliver no substance. OpenAI alone has reportedly received around 13 billion dollars from Microsoft. Google, Meta, Amazon, and others pump additional billions into their own models. It is rarely communicated openly that these are not thinking machines but optimized autocompletion systems.

Users become beta testers of a hype whose output often provides less benefit than a good search engine with common sense.

How to Recognize When You’re Dealing with Illusion#

- Answers are formally correct but empty or misleading in content.

- Reliance on JSON, tool calls, or other “formalized” structures.

- Follow-up questions produce new claims, not clarification.

- No references, no verification paths, no transparency about where an answer comes from.

- No difference between “I don’t know” and “I’ll say anything.”
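One of these signs, answers that shift from one attempt to the next, can be probed mechanically. The sketch below asks the same question several times and reports how often the most common answer appears. The `ask` callable and the canned answers are hypothetical stand-ins for a real model call, and exact string comparison is a deliberately crude notion of “same answer.”

```python
from collections import Counter

def consistency(ask, question, runs=5):
    """Ask the same question several times and measure agreement.

    `ask` is any callable mapping a prompt string to an answer string.
    Returns the most common normalized answer and its share of the runs.
    """
    answers = [ask(question).strip().lower() for _ in range(runs)]
    top_answer, count = Counter(answers).most_common(1)[0]
    return top_answer, count / runs

def make_fake_model(canned):
    """Stand-in for a real model: cycles through canned answers."""
    state = {"i": 0}
    def ask(prompt):
        answer = canned[state["i"] % len(canned)]
        state["i"] += 1
        return answer
    return ask

stable = make_fake_model(["1889"])
unstable = make_fake_model(["1889", "1887", "1901", "1889", "1875"])

question = "When was the Eiffel Tower completed?"
print(consistency(stable, question))
print(consistency(unstable, question))
```

A low agreement score suggests the system is guessing. A high score proves nothing by itself, since a model can be consistently wrong, so this check complements verification against a trusted source and does not replace it.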

Evaluate systems based on their actual performance—not their UI. Systematically identify, log, and compare false answers. Use LLMs as language tools, not decision-makers. Host your own models locally where possible (e.g., Ollama, vLLM). Use controlled APIs with logging, model verification, and fallback stops. Train users, don’t dazzle them.
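Logging, fallback stops, and the systematic comparison of false answers can be sketched in a few lines. Everything named here (`guarded_ask`, `evaluate`, `FALLBACK`, the `fake_model` stub, and the test cases) is hypothetical and exists only for illustration. A real setup would call a local or hosted model and would need a stricter comparison than a substring match.

```python
import json
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("llm_audit")

FALLBACK = "No reliable answer available."

def guarded_ask(ask, prompt, max_chars=2000):
    """Call the model, log the exchange, and stop on unusable output."""
    started = time.time()
    try:
        answer = ask(prompt)
    except Exception as exc:  # fallback stop: errors never pass as answers
        answer, status = FALLBACK, f"error: {exc}"
    else:
        if not answer or not answer.strip() or len(answer) > max_chars:
            answer, status = FALLBACK, "rejected"
        else:
            status = "ok"
    log.info(json.dumps({
        "prompt": prompt,
        "answer": answer,
        "status": status,
        "seconds": round(time.time() - started, 3),
    }))
    return answer

def evaluate(ask, cases):
    """Compare answers with known references and collect the misses."""
    failures = []
    for prompt, expected in cases:
        answer = guarded_ask(ask, prompt)
        if expected.lower() not in answer.lower():
            failures.append({"prompt": prompt, "expected": expected, "got": answer})
    return failures

def fake_model(prompt):
    """Stand-in model with one wrong and one empty answer."""
    return {"2 + 2 = ?": "4", "Capital of Australia?": "Sydney"}.get(prompt, "")

cases = [
    ("2 + 2 = ?", "4"),
    ("Capital of Australia?", "Canberra"),
    ("Unknown?", "n/a"),
]
failures = evaluate(fake_model, cases)
print(f"{len(failures)} of {len(cases)} answers failed")
```

Running the same case list against several models, or against the same model over time, yields comparable failure logs without relying on how convincing any single answer sounds.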

What we call “AI” today is, in most cases, a linguistic hall of mirrors. It looks intelligent because it mimics our language—not because it understands anything. It sounds helpful because it has been trained to sound agreeable—not because it solves problems.

The technological progress is real, but the promises are exaggerated. What is missing is not computing power or scale, but honesty. The illusion itself is not the danger; it becomes one when it becomes the norm.

The AI promise was never “it sounds good.” It was: “It works.” And in too many cases, that is not the reality.
