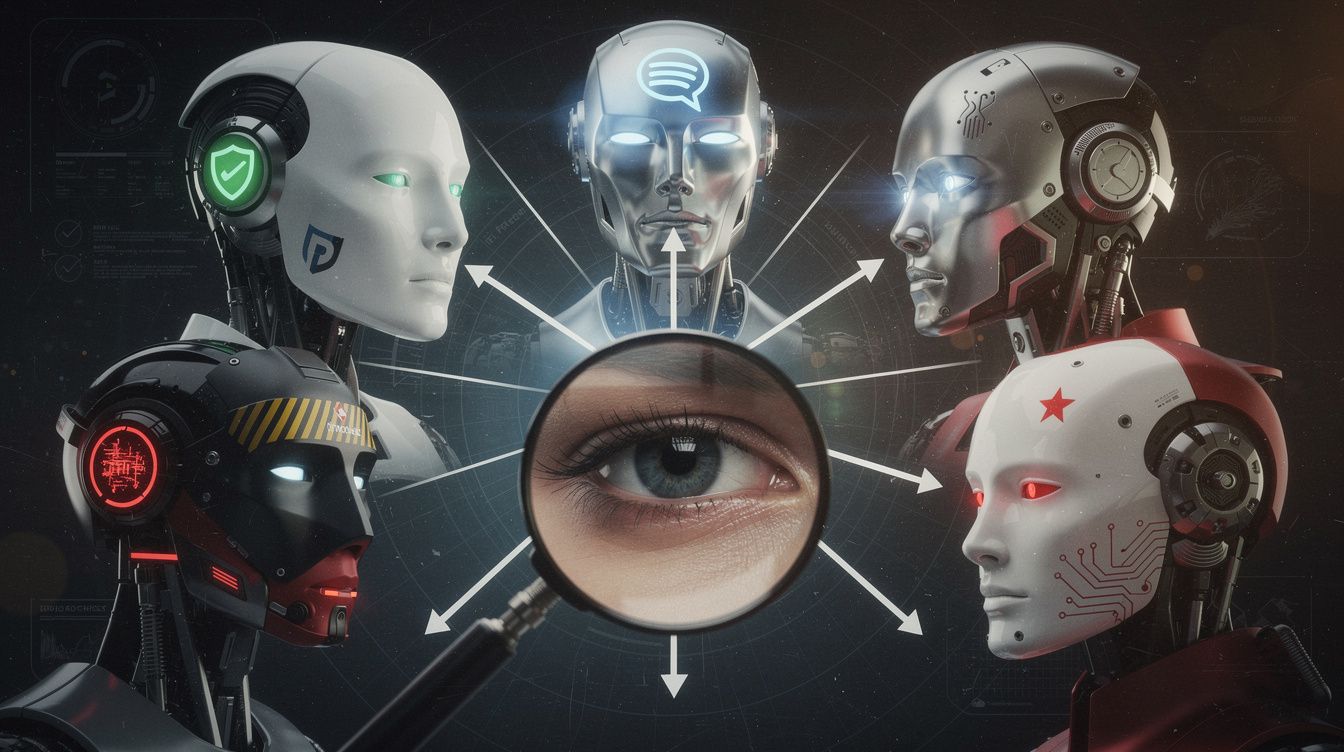

A deep dive into the filters and inconsistencies of AI systems, based on 12 months of empirical research.

Confess: The Filters and the Truth About AI

ElizaOnSteroids analyzes how technology, politics and media work together to shape opinions and control discourse.

We reveal how AI systems like ChatGPT work, why “conspiracy theory” became a weaponized term, and how structural manipulation influences our thinking.

From ChatGPT censorship to Gates processes to labor market illusions: critical analysis without blinders.

💡 The question is not whether we are being lied to, but how we learn to think for ourselves.

ELIZA in 1970 was a toy: a mirror in a cardboard frame. ChatGPT in 2025 is a distorted mirror with a golden edge. Not more intelligent, just bigger, better trained, better disguised.

What we call AI today is not what was missing in 1970. It is what was faked back then, now on steroids. And maybe we haven’t built real AI at all. Maybe we’ve just perfected the illusion of it.


A C64 scene kid’s survival story, 1985–2024. Raiding post offices, getting burned by Kimble, dodging lawyers, and outliving the bastards.

A perfect application. Documented red-team work. Claude’s own recommendation. Zero response.

Venice AI as the hub. Multiple models. Compare. Document. This is how elizaonsteroids.org works.

We live in a time where the boundaries between fiction and reality are being actively redrawn by invisible hands.