AI Models Score Like C-Students: What 66% Benchmark Scores Really Mean

The “Best” AI Models in the World: A Reality Check

Everyone talks about the AI revolution. Superintelligence around the corner. AGI any day now. But what do the actual benchmarks say when we look at standardized testing across 171+ different tasks?

The numbers are sobering:

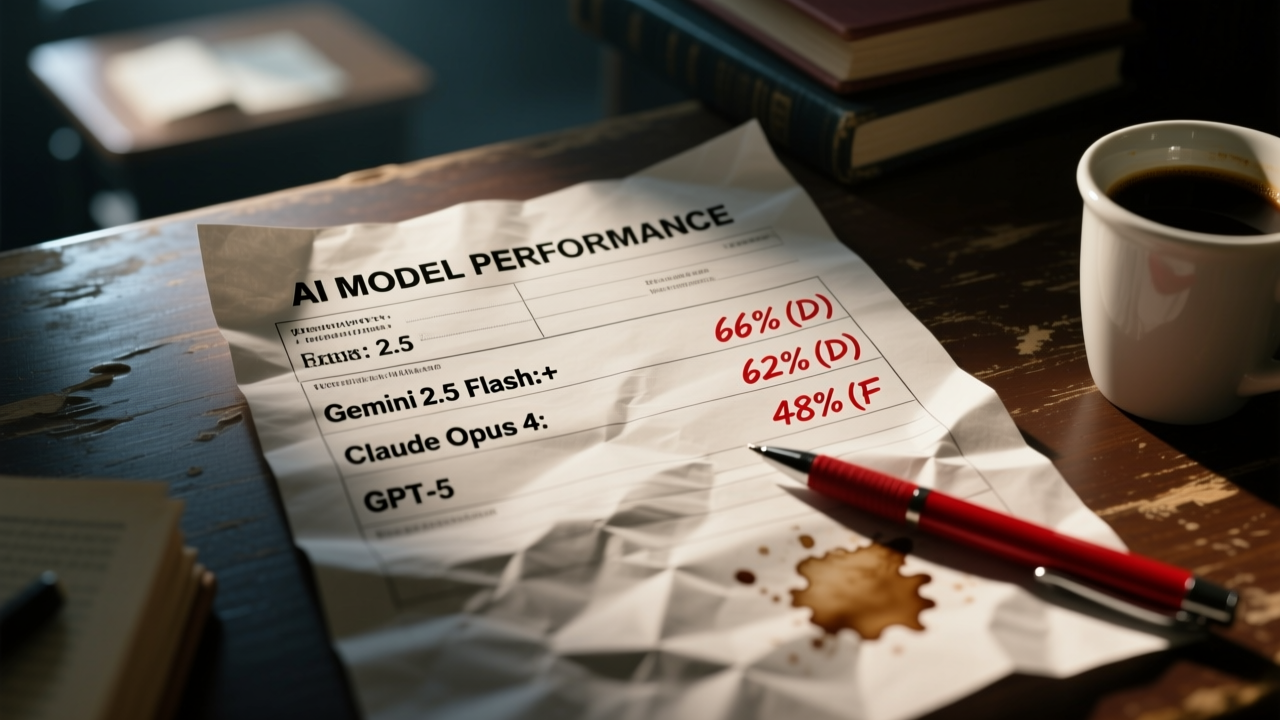

| Model | Overall Score | Tasks Failed | Grade Equivalent |
|---|---|---|---|
| Gemini 2.5 Flash | 66% | 1 in 3 | D+ |
| Claude Opus 4 | 62% | ~2 in 5 | D |
| GPT-5 | 48% | 1 in 2 | F (Fail) |

Let that sink in. These aren’t cherry-picked failure cases — these are aggregate scores across coding, reasoning, specification compliance, and stability tests.

The Math Nobody Talks About

Gemini 2.5 Flash, the current leader, fails 34% of tasks. In an academic setting, that is a D+ student: not failing, but far from reliable.

GPT-5 — OpenAI’s flagship — performs at coin-flip level (48%). This is below most schools’ passing threshold. Yet this is the model powering enterprise applications, coding assistants, and automated decision-making systems worldwide.
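To make the arithmetic explicit, here is a minimal sketch that reads each overall score as a pass rate and turns the remainder into a "k in n" failure figure. The helper name `failure_fraction` and the cap of 5 on the denominator are illustrative choices, not part of the benchmark.

```python
from fractions import Fraction

# Overall scores from the table above, in percent.
SCORES = {"Gemini 2.5 Flash": 66, "Claude Opus 4": 62, "GPT-5": 48}


def failure_fraction(score_percent: int, max_denominator: int = 5) -> Fraction:
    """Return the failure rate as the nearest fraction with a small denominator."""
    exact = Fraction(100 - score_percent, 100)
    # limit_denominator picks the closest fraction whose denominator
    # does not exceed max_denominator (an illustrative cap, not a benchmark rule).
    return exact.limit_denominator(max_denominator)


for model, score in SCORES.items():
    approx = failure_fraction(score)
    print(f"{model}: fails {100 - score}% of tasks, "
          f"roughly {approx.numerator} in {approx.denominator}")
```

With these inputs the rounded fractions come out as 1 in 3, 2 in 5, and 1 in 2, which is where the "Tasks Failed" column comes from.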

What These Scores Actually Measure

The AI Stupid Meter evaluates models across seven critical axes:

- Correctness — Does it produce the right answer?

- Specification compliance — Does it follow instructions precisely?

- Code quality — Is generated code maintainable and efficient?

- Efficiency — Resource usage and speed

- Stability — Consistent performance across similar tasks

- Refusal rates — How often it declines valid requests

- Recovery ability — Can it fix its own mistakes?

A 66% score doesn’t mean “gets 66% of questions right” — it means “performs adequately across 66% of our comprehensive evaluation criteria.”
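How the seven axes combine into one number is not spelled out here, so the following is only a sketch under a loud assumption: every axis is first normalized to a 0 to 1 score where higher is better, and the axes are weighted equally. The axis keys, the `overall_score` helper, and the per-axis numbers are all hypothetical.

```python
# Hypothetical keys for the seven axes listed above.
AXES = (
    "correctness", "spec_compliance", "code_quality", "efficiency",
    "stability", "refusal_rate", "recovery",
)


def overall_score(axis_scores, weights=None):
    """Weighted mean of per-axis scores, each assumed to lie in [0.0, 1.0]."""
    if weights is None:
        # Assumption: equal weights. The real benchmark may weight axes differently.
        weights = {axis: 1.0 for axis in AXES}
    missing = [axis for axis in AXES if axis not in axis_scores]
    if missing:
        raise ValueError(f"missing axis scores: {missing}")
    total_weight = sum(weights[axis] for axis in AXES)
    weighted_sum = sum(axis_scores[axis] * weights[axis] for axis in AXES)
    return weighted_sum / total_weight


# Made-up per-axis numbers, chosen only to show how uneven axes can average to 66%.
example = {
    "correctness": 0.90, "spec_compliance": 0.70, "code_quality": 0.60,
    "efficiency": 0.50, "stability": 0.60, "refusal_rate": 0.80, "recovery": 0.52,
}
print(f"overall: {overall_score(example):.0%}")
```

The point of the sketch is that a single aggregate can hide a strong axis and a weak one behind the same headline figure.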

The C-Student Running Your Business: Real-World Impact

Think about what these failure rates mean in production:

For Developers

- Your AI code completion suggests 3 solutions → 1 is subtly wrong and introduces a bug

- That “intelligent” refactoring? 50/50 shot it breaks something

- Documentation generation? Every third docstring contains hallucinated parameters

For Business Operations

- Customer support chatbot with 1,000 daily queries → 340 frustrated customers getting wrong answers

- Automated data extraction from 1,000 invoices a day → 340 invoices need manual review

- AI-powered email triage → Half your important emails might get misclassified
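The volume figures in this list are simple multiplication. A minimal sketch, assuming the overall score can be read as a pass rate and using a hypothetical helper `expected_failures`:

```python
def expected_failures(daily_volume: int, score_percent: int) -> int:
    """Expected failed items per day when the score is read as a pass rate."""
    # Integer arithmetic keeps the result exact for whole-percent scores.
    return daily_volume * (100 - score_percent) // 100


# Illustrative workloads; the daily volume of 1,000 is an example figure.
workloads = [
    ("support queries at a 66% score", 1000, 66),
    ("emails triaged at a 48% score", 1000, 48),
]
for label, volume, score in workloads:
    print(f"{label}: about {expected_failures(volume, score)} of {volume} go wrong")
```

Swap in your own daily volume to see what a given score would mean for your review queue.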

The Hidden Cost

Each failure isn’t just an error — it’s:

- Time spent debugging AI-generated mistakes

- Reputation damage from wrong customer-facing answers

- Decision risk when leadership acts on flawed analysis

We’re not in the age of artificial intelligence. We’re in the age of artificial confidence — systems that sound authoritative while being fundamentally unreliable.

Why This Matters: Beyond the Hype Cycle

The AI marketing machine shows demos. Benchmarks show reality. Understanding this gap is crucial for:

1. Setting Realistic Expectations

A 66% score means you must build:

- Human-in-the-loop review systems

- Automated validation layers

- Fallback mechanisms for critical paths
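One way these three pieces can fit together is sketched below: validate every model answer, retry a bounded number of times, try a fallback, and flag anything still unvalidated for a human. The function `answer_with_guardrails` and the stub callables are hypothetical placeholders, not a real library API.

```python
from typing import Callable, Optional


def answer_with_guardrails(
    prompt: str,
    generate: Callable[[str], str],
    validate: Callable[[str], bool],
    fallback: Optional[Callable[[str], str]] = None,
    max_attempts: int = 2,
) -> dict:
    """Return a validated answer, or flag the request for human review."""
    # Automated validation layer: accept a model answer only if it passes the check.
    for _ in range(max_attempts):
        candidate = generate(prompt)
        if validate(candidate):
            return {"answer": candidate, "source": "model", "needs_review": False}
    # Fallback mechanism: for example, a rule-based path for critical requests.
    if fallback is not None:
        candidate = fallback(prompt)
        if validate(candidate):
            return {"answer": candidate, "source": "fallback", "needs_review": False}
    # Human in the loop: nothing passed validation, so a person has to look.
    return {"answer": None, "source": "none", "needs_review": True}


# Stubs standing in for a real model call and a real rule-based extractor.
def flaky_model(prompt: str) -> str:
    return "total: twelve"


def rule_based(prompt: str) -> str:
    return "12"


result = answer_with_guardrails("Invoice total?", flaky_model, str.isdigit, rule_based)
print(result)
```

In practice the validator is where most of the effort goes; a schema check, a unit test run, or a range check are common choices depending on the task.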

2. Model Selection Strategy

Don’t just pick the “best” model. Pick the right model for your use case:

- High-stakes decisions? Use multiple models and consensus voting

- Creative tasks? 66% might be acceptable

- Safety-critical? Current AI isn’t ready without extensive guardrails
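Consensus voting can be as simple as a majority count over the answers several models return. The sketch below assumes the answers are short strings that can be compared after trimming and lowercasing; the `consensus` helper and its `min_agreement` threshold are illustrative.

```python
from collections import Counter
from typing import List, Optional


def consensus(answers: List[str], min_agreement: int = 2) -> Optional[str]:
    """Return the most common answer if enough models agree, otherwise None."""
    if not answers:
        return None
    # Normalize so trivial formatting differences do not split the vote.
    normalized = [answer.strip().lower() for answer in answers]
    winner, votes = Counter(normalized).most_common(1)[0]
    return winner if votes >= min_agreement else None


# Hypothetical outputs from three different models for the same request.
print(consensus(["Approve", "approve ", "reject"]))
print(consensus(["approve", "reject", "escalate"]))
```

A `None` result is the useful signal here: when the models disagree, route the decision to a person instead of picking one answer arbitrarily. Free-form text needs a smarter comparison than exact string matching.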

3. Cost-Benefit Analysis

If your AI fails 34% of the time and fixing each failure costs twice what a successful task saves, you’re losing money: the 66 successes in every 100 tasks cannot pay for the 34 repairs.
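The break-even point is easy to compute. This sketch assumes the "2x" is the cost of fixing one failure measured against the saving from one successful task, and takes a 34% failure rate from the 66% score; both function names are hypothetical.

```python
def net_saving_per_100_tasks(
    score_percent: int, saving_per_success: float, cost_per_failure: float
) -> float:
    """Net saving over 100 tasks: gains from successes minus repair costs."""
    successes = score_percent
    failures = 100 - score_percent
    return successes * saving_per_success - failures * cost_per_failure


def break_even_cost(score_percent: int, saving_per_success: float) -> float:
    """Per-failure repair cost at which the AI neither saves nor loses money."""
    if score_percent >= 100:
        raise ValueError("a model that never fails has no break-even repair cost")
    return score_percent * saving_per_success / (100 - score_percent)


# One unit saved per success, two units spent per failure.
print(net_saving_per_100_tasks(66, 1.0, 2.0))
print(round(break_even_cost(66, 1.0), 2))
```

At a 66% score the break-even repair cost is a little under twice the per-task saving, so a 2x repair cost tips the balance slightly negative.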

4. The Benchmark Gaming Problem

Models are increasingly optimized for specific benchmarks, not general reliability. A high score on a public benchmark doesn’t guarantee good performance on your specific tasks.
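The practical answer is a small evaluation set built from your own tasks. A minimal sketch, assuming each case is a prompt with one exact expected answer and that the model is any callable from prompt to text; `pass_rate` and `toy_model` are hypothetical stand-ins.

```python
from typing import Callable, Iterable, Tuple


def pass_rate(model: Callable[[str], str], cases: Iterable[Tuple[str, str]]) -> float:
    """Fraction of cases where the model output exactly matches the expected answer."""
    cases = list(cases)
    if not cases:
        raise ValueError("need at least one test case")
    passed = sum(1 for prompt, expected in cases if model(prompt).strip() == expected)
    return passed / len(cases)


# Toy stand-in for a real model call, used only so the example runs.
def toy_model(prompt: str) -> str:
    known = {"2+2": "4", "capital of France": "Paris"}
    return known.get(prompt, "unknown")


my_cases = [("2+2", "4"), ("capital of France", "Paris"), ("3*3", "9")]
print(f"pass rate on my tasks: {pass_rate(toy_model, my_cases):.0%}")
```

Exact matching is the crudest possible check; for code or free text you would replace the comparison with tests or a rubric, but the loop stays the same.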

Track It Yourself: Live Performance Monitoring

We’ve integrated real-time AI performance monitoring directly into the Eliza on Steroids platform. This isn’t static data — it updates every 4 hours with fresh benchmarks.

👉 Check Live AI Performance Stats →

See which models are actually performing today, which ones are degrading, and make informed decisions about which AI to trust.

The Bottom Line

AI models are powerful tools, but they’re not oracles. A 66% benchmark score is a reminder that human oversight isn’t optional — it’s essential.

The revolution is real, but it’s a C-student revolution. Plan accordingly.

Data sourced from AI Stupid Meter — independent, real-time AI model benchmarking across 171+ tests and 16+ models. Methodology: 7-axis evaluation, updated every 4 hours.
