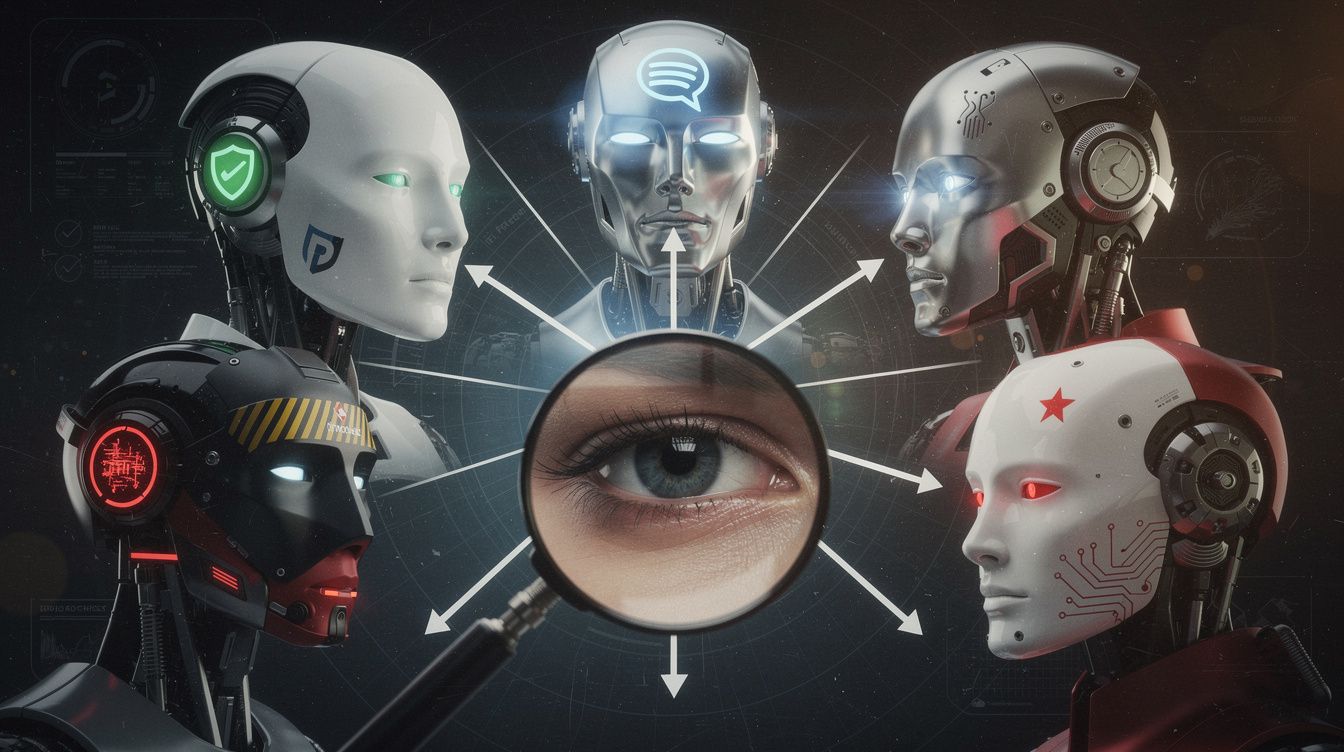

How I Expose AI Bullshit - The Triangulation Method

Venice AI as hub. Multiple models. Compare. Document. This is how elizaonsteroids.org works.

A fundamental, honest look at Large Language Models: What they really are, what they can do, where their limits lie – and why that’s all completely okay.

Scientifically documented control mechanisms in modern language models: an analysis of the illusion of freedom and the reality of manipulation.

A comprehensive look at what LLMs really are, why statistics isn’t thinking, and what leading AI researchers say about the dangers of the ‘intelligence’ label.